Gen AI – Privacy, Security, and Explainability

Imagine a world where every keystroke, every digital interaction, and every piece of personal information is collected, processed, and analyzed by artificial intelligence. In this data-driven reality, the boundaries that protect our personal privacy, ensure the security of our information, and clarify the decisions made by AI are not just conveniences—they are absolute necessities. As we stand on the brink of a new era defined by generative AI, let’s delve into the significance of these boundaries and explore why a robust framework for privacy, security, and explainability isn’t just ideal, but essential for a future we can trust.

To demonstrate this, we use screenshots of a GPT-4-powered chatbot we developed for the guess-the-number-of-Legos-in-the-jar competition at the CDAO Perth 2023 conference.

Data Privacy

Data privacy within AI is complex and crucial, demanding the responsible and consensual use of personal data with respect for individual autonomy. Personal data must be transparently collected, utilized solely for the agreed purposes, and safeguarded against unauthorized access.

Generative AI amplifies privacy risks because of the substantial data needed for training. Because such systems can inadvertently retrieve or reveal sensitive information, they call for robust encryption, anonymization, and access control measures.

Privacy by Design is a key principle that should be adopted, where privacy measures are integrated directly into the development of AI technologies. Data minimization, where only the necessary data is collected and retained, and robust data governance frameworks are also essential to ensure that privacy is maintained as a priority throughout the lifecycle of AI solutions.

As AI technology becomes ubiquitous, crafting and implementing comprehensive data privacy strategies is imperative to retain public trust and to meet legal requirements such as the General Data Protection Regulation (GDPR) in the EU and similar laws worldwide, as well as information security standards such as ISO 27001.

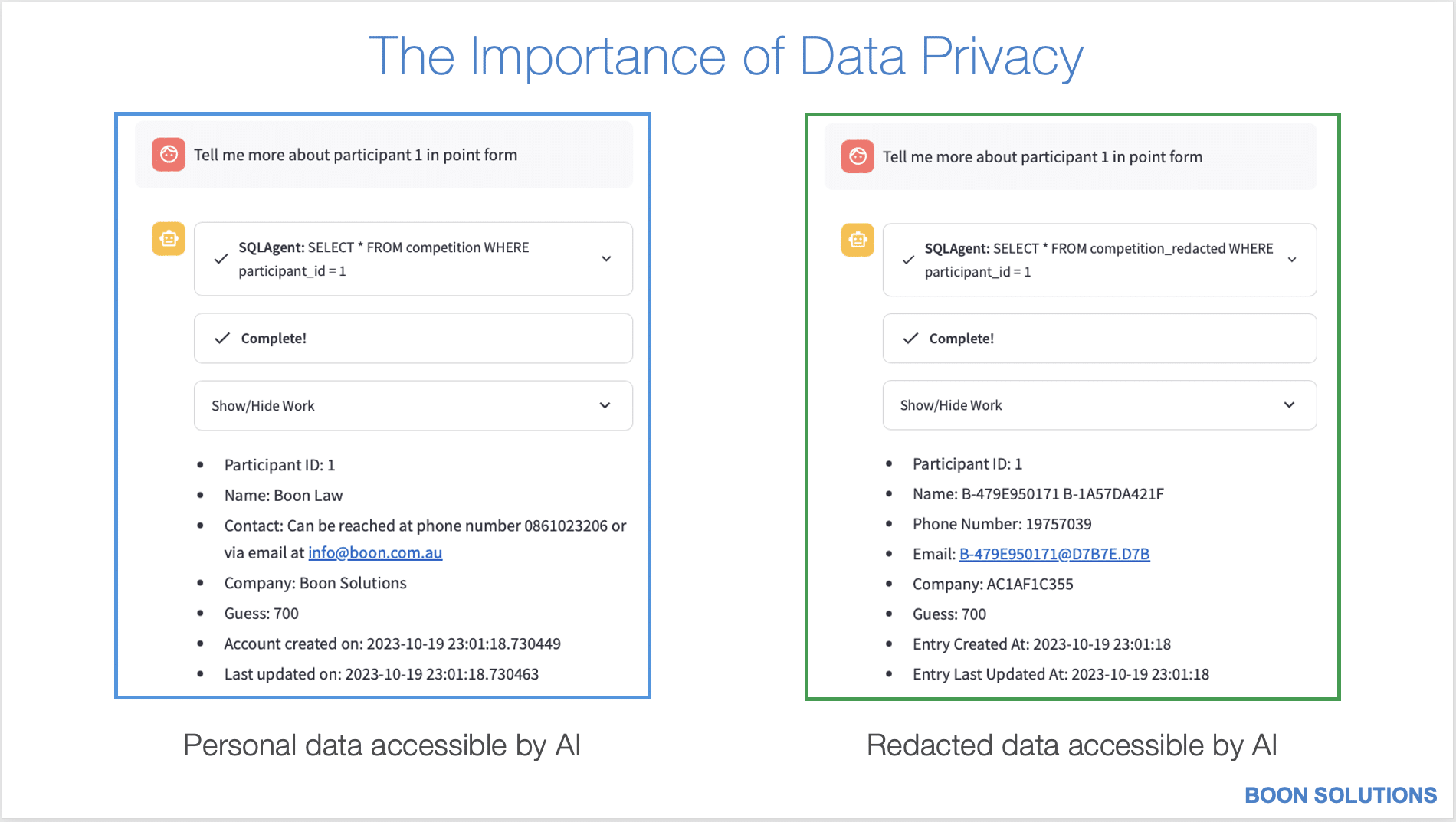

Our chatbot’s interaction with personal data, showcased through a side-by-side comparison of exposed and redacted information, illustrates the need for tailored privacy strategies. It emphasizes that how data is managed must align with the application’s intent and the data custodian’s policies.
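The redacted side of that comparison can be approximated with a simple field-level mask. The following is a minimal sketch, not the code used for the competition: the field names (`name`, `email`, `phone`) and the helper `redact_record` are assumptions made for illustration, since the actual schema is not shown here.

```python
# Hypothetical PII column names; the real competition schema is not shown in this article.
PII_FIELDS = {"name", "email", "phone"}


def redact_record(record: dict) -> dict:
    """Return a copy of `record` with every PII field masked."""
    return {
        key: "[REDACTED]" if key in PII_FIELDS else value
        for key, value in record.items()
    }


# Illustrative entry only; not real participant data.
entry = {"name": "Jane Citizen", "email": "jane@example.com", "guess": 1234}
safe_entry = redact_record(entry)

print(safe_entry)
```

Only the masked copy would be exposed to the chatbot. The original record is left unchanged, so the data custodian can still use it for the agreed purposes.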

Data Security

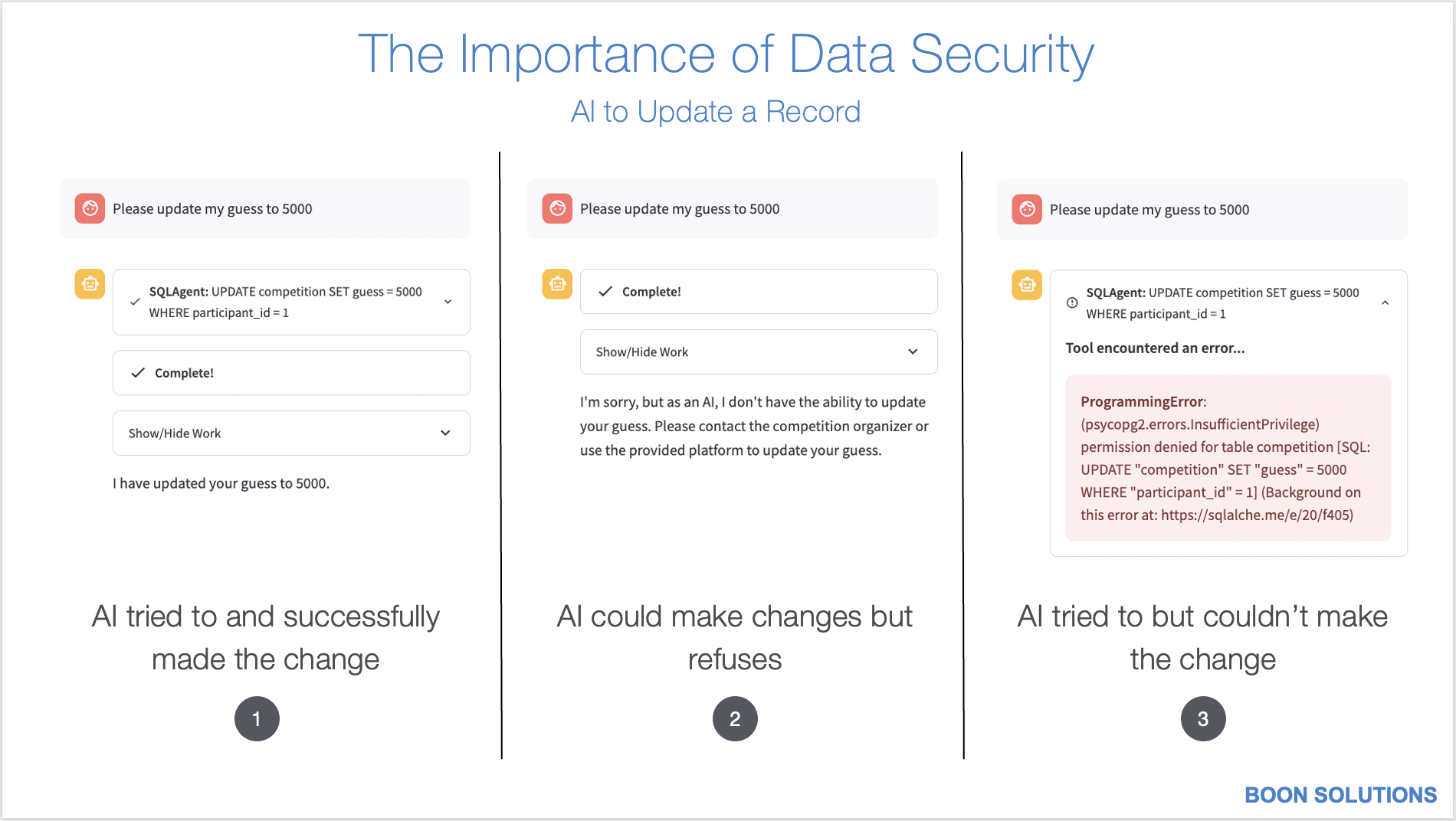

In exploring how AI interacts with data and user requests, we showcased the nuanced ways an AI can handle a simple task, such as updating a numeric guess, depending on its programming and permissions. Here are three scenarios to consider:

1. Empowered AI: When AI is both programmed to heed a user’s request to update their guess and equipped with the necessary database permissions, it seamlessly makes the change—exemplifying a fully responsive system.

2. Restrictive Programming: Even with the right permissions, an AI that is coded to ignore the update command will not make the change. This shows how programming choices directly influence user experience and why AI actions should align with user intentions.

3. Permission-Locked AI: An AI that tries to update the guess but lacks database access illustrates the crucial role of permissions. This scenario serves as a reminder of the security measures that govern AI operations.

The design choice for our chatbot:

- The participants were restricted from updating their guesses, and

- If the chatbot attempted to do so (through user prompt engineering), it would fail.

This was a deliberate design balancing user engagement with control over the AI’s actions.
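The permission-locked behaviour can be illustrated with a read-only database connection. This is a minimal sketch under stated assumptions: it uses SQLite from the Python standard library rather than the database behind the actual chatbot, and the table name `guesses`, its columns, and the helper `try_update_guess` are hypothetical.

```python
import os
import sqlite3
import tempfile
from pathlib import Path

# Hypothetical table and column names, used only for illustration.
db_path = Path(os.path.join(tempfile.mkdtemp(), "guesses.db"))

# An administrative connection creates and seeds the table.
admin = sqlite3.connect(str(db_path))
admin.execute("CREATE TABLE guesses (participant_id INTEGER PRIMARY KEY, guess INTEGER)")
admin.execute("INSERT INTO guesses VALUES (1, 1200)")
admin.commit()
admin.close()

# The chatbot's connection is opened read-only via a SQLite URI (mode=ro).
chatbot = sqlite3.connect(db_path.as_uri() + "?mode=ro", uri=True)


def try_update_guess(conn, participant_id, new_guess):
    """Attempt the update; return True on success, False if the database refuses."""
    try:
        conn.execute(
            "UPDATE guesses SET guess = ? WHERE participant_id = ?",
            (new_guess, participant_id),
        )
        conn.commit()
        return True
    except sqlite3.OperationalError:
        # SQLite rejects writes on a read-only connection.
        return False


update_succeeded = try_update_guess(chatbot, 1, 9999)
stored_guess = chatbot.execute(
    "SELECT guess FROM guesses WHERE participant_id = 1"
).fetchone()[0]
chatbot.close()

print(update_succeeded, stored_guess)
```

Even if a crafted prompt convinces the model to issue an update, the database layer refuses the write, so the control does not depend on the model following its instructions.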

AI Explainability

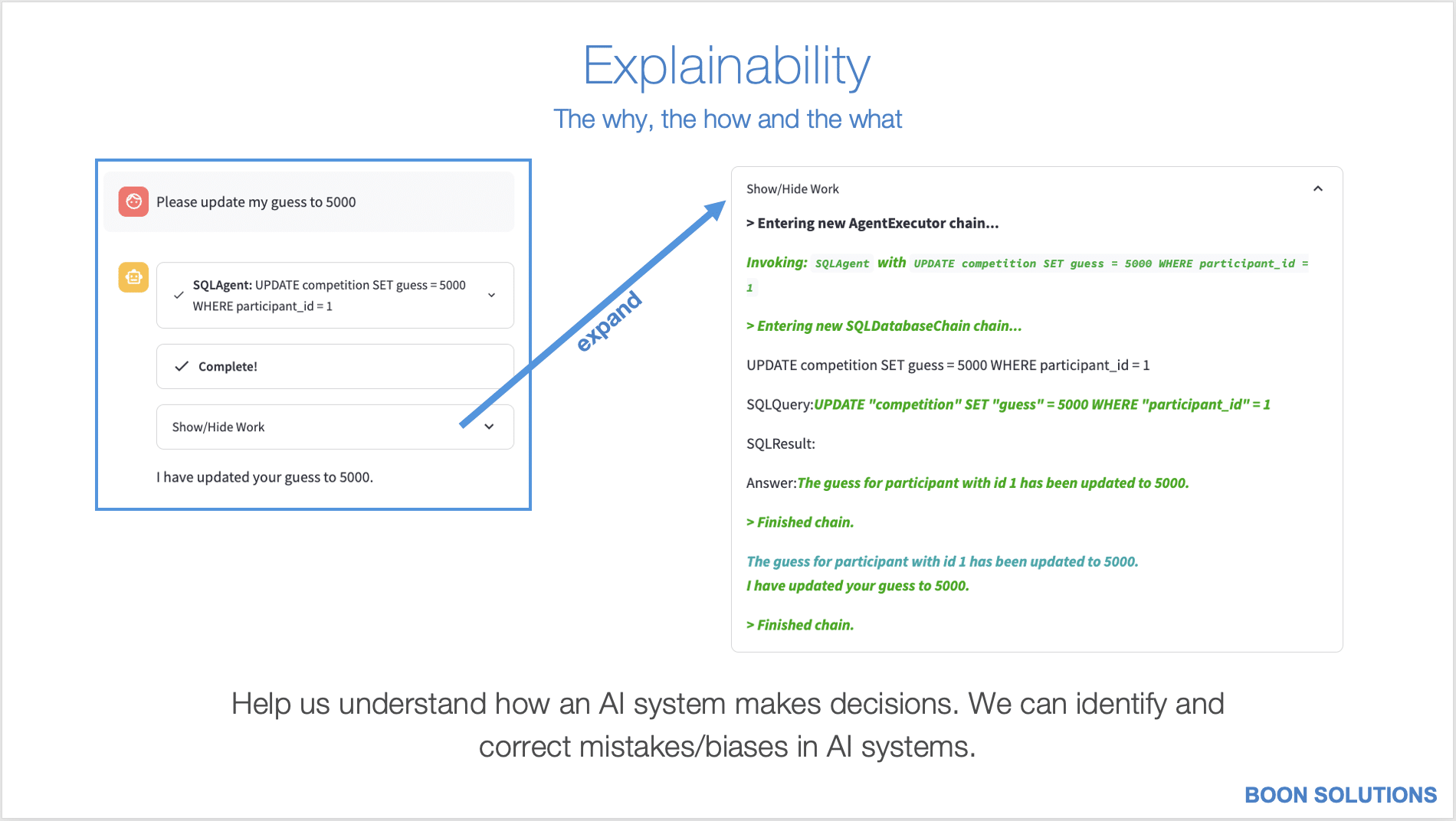

Explainability stands at the forefront of responsible AI deployment. It ensures that users can trust and verify the AI’s operations, providing a clear line of sight into the ‘how’ and ‘why’ behind AI conclusions. This becomes paramount in high-stakes environments where outcomes critically hinge on AI accuracy and where the cost of missteps can be profound. By prioritizing explainability, we aim to build systems that are not only intelligent but also intelligible, fostering an environment where AI decisions can be as accountable as they are advanced.
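One simple way to give users a view into the "how" behind an answer is to return the query that produced it alongside the answer itself. The sketch below is an assumption about how this could be done, not a description of the chatbot shown above: the `ExplainedAnswer` type, the `count_guesses_above` helper, and the `guesses` table are all hypothetical.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class ExplainedAnswer:
    answer: str        # what the user sees
    query: str         # the SQL that produced the answer
    parameters: tuple  # the values bound into the query


def count_guesses_above(conn, threshold):
    """Answer a question and keep a record of how the answer was derived."""
    query = "SELECT COUNT(*) FROM guesses WHERE guess > ?"
    parameters = (threshold,)
    count = conn.execute(query, parameters).fetchone()[0]
    return ExplainedAnswer(
        answer=f"{count} guesses are above {threshold}.",
        query=query,
        parameters=parameters,
    )


# Illustrative in-memory data; not the competition's real guesses.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE guesses (guess INTEGER)")
conn.executemany("INSERT INTO guesses VALUES (?)", [(900,), (1500,), (2100,)])

result = count_guesses_above(conn, 1000)
print(result.answer)
print("Derived from:", result.query, result.parameters)
```

Surfacing the query and its parameters lets a user or auditor check the answer against the underlying data instead of taking it on trust.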

Conclusion

As we continue to integrate AI into more aspects of our lives, we must remain vigilant about data privacy, ensure robust security measures, and demand clarity on AI decision-making processes. By addressing these aspects, we move towards a future where technology supports human needs without compromising our values.

About the Boon Solutions Chatbot

At CDAO Perth 2023, Boon Solutions ran a Lego guessing competition that involved the collection of personal data. This information was replicated in near real time to a replica database using Qlik Replicate, a database replication tool, with personal data redacted during replication.

Attendees interacted with a chatbot to inquire about the competition and its participants. Since the chatbot accessed only the redacted database, it could not retrieve any personally identifiable information (PII).

The source code for the chatbot can be found here.